In this exercise, we worked with Fourier transforms and convolution of images using Scilab's built-in fast Fourier transform (FFT) functions. Fourier transforming a signal gives us the distribution of the frequencies present in that signal. A signal can be decomposed into a set of sines and cosines, whose amplitudes (and phases) are given by its discrete Fourier transform.
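For reference, here is the usual textbook definition of the 2D discrete Fourier transform of an M x N image f(x, y), together with its inverse. Libraries differ in where they put the 1/(MN) factor, so treat this as the standard convention rather than an exact statement of what Scilab computes. Each F(u, v) is the complex amplitude of the 2D sinusoid with spatial frequency (u/M, v/N).

$$
F(u,v) = \sum_{x=0}^{M-1}\sum_{y=0}^{N-1} f(x,y)\, e^{-i 2\pi \left(\frac{ux}{M} + \frac{vy}{N}\right)}
$$

$$
f(x,y) = \frac{1}{MN}\sum_{u=0}^{M-1}\sum_{v=0}^{N-1} F(u,v)\, e^{+i 2\pi \left(\frac{ux}{M} + \frac{vy}{N}\right)}
$$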

An image is basically a two-dimensional signal, since it is a collection of information stored in an array of pixels. In photography, for example, information (color, brightness, etc.) is transferred from the subject to the pixels of the camera's sensor. Each region of the sensor stores a signal that depends on the properties of the corresponding portion of the subject. After these signals are processed, we are able to view the subject in a photograph, which would hopefully be as colorful (or vibrant or whatever) as the subject.

Anyway, so there. Scilab has the functions fft() and fft2() to perform the discrete Fourier transform of one-dimensional and two-dimensional signals, respectively. Since we're dealing with images, which are 2D, we will be using fft2(). These functions can also be used to go back from frequency space to the original signal, which is quite handy.
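To make the forward/inverse pair concrete, here is a minimal Python sketch (not Scilab) that computes a 2D DFT by direct summation and then inverts it. The helper `dft2` is hypothetical: it is the same transform fft2() computes, just done slowly, which is fine for a tiny 4x4 test image.

```python
import cmath

def dft2(img, inverse=False):
    """Direct 2D DFT of a list-of-lists image (slow, fine for tiny arrays).

    Forward: F[u][v] = sum over x, y of f[x][y] * exp(-2j*pi*(u*x/M + v*y/N)).
    Inverse: same sum with +2j*pi in the exponent, divided by M*N.
    """
    M, N = len(img), len(img[0])
    sign = 1 if inverse else -1
    scale = 1 / (M * N) if inverse else 1
    return [[scale * sum(img[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x / M + v * y / N))
                         for x in range(M) for y in range(N))
             for v in range(N)]
            for u in range(M)]

# A tiny 4x4 "image" containing a bright 2x2 square.
image = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]

spectrum = dft2(image)                     # to frequency space
recovered = dft2(spectrum, inverse=True)   # and back again
# Keep the real part and round away floating-point noise.
recovered_real = [[round(val.real, 6) for val in row] for row in recovered]
print(recovered_real)
```

The element spectrum[0][0] is simply the sum of all pixel values (here 4), which is the zero-frequency (DC) term.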

Figure 1. Transformation of two images to frequency space and back to the original.

Side note: It took me so long to finish this exercise because I couldn't figure out what was wrong with my Scilab code. Actually, there were no errors. It's just that I didn't use the function mat2gray(), which converts a matrix (here, the one containing the Fourier-transformed image) into a grayscale image. Meryl told me to add this function to produce the proper image of the Fourier transform and of the other images. Without her suggestion, I wouldn't have been able to do the entire exercise. Seriously hahaha.

Figure 2. Conversion of the matrix to an image using mat2gray()
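A sketch of the kind of rescaling mat2gray() does, assuming it behaves like MATLAB's function of the same name (minimum mapped to 0, maximum mapped to 1). The helper `to_gray` and the toy values are hypothetical. Replacing complex FFT values by their modulus first is also an assumption here, since that is how Fourier magnitudes are usually displayed.

```python
def to_gray(matrix):
    """Linearly rescale a matrix to [0, 1]: minimum -> 0, maximum -> 1.

    Complex entries (e.g. raw FFT output) are replaced by their modulus first.
    """
    mags = [[abs(v) for v in row] for row in matrix]
    lo = min(min(row) for row in mags)
    hi = max(max(row) for row in mags)
    if hi == lo:
        # A constant matrix has no contrast; return all zeros instead of dividing by zero.
        return [[0.0 for _ in row] for row in mags]
    return [[(v - lo) / (hi - lo) for v in row] for row in mags]

# Toy complex "spectrum" values, made up for illustration.
spectrum = [[4 + 0j, -2j],
            [3, 1 + 1j]]
print(to_gray(spectrum))
```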

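Going back to Figure 1, after performing the Fourier transform, we still need to use the function fftshift(). As seen in the second column of Figure 1, the bright regions, which contain the information from the Fourier transform, are located at the four corners of the image. This is because the FFT places the zero-frequency component at the first element (the top-left corner), and the other low frequencies wrap around to the remaining corners. Using fftshift() swaps the quadrants so that these bright regions are moved to the center of the image. As seen in the Fourier transform of the circular aperture, the result is an Airy pattern. Also, the image recovered from the Fourier transform of the letter "A" is inverted relative to the original image. This is expected if the forward transform is applied a second time instead of a true inverse, since doing so maps f(x, y) to f(-x, -y), which rotates the image by 180 degrees.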

Simulation of an imaging device

Due to the finite size of the optical components of an imaging device, a photograph can differ quite a lot from the subject. The photograph is the convolution of the subject with the impulse response of the imaging device; equivalently, in frequency space, the Fourier transform of the subject is multiplied by the transfer function of the device. Lenses with large diameters produce sharper images than lenses with small diameters, since they let more rays from the subject pass through, including those carrying fine (high spatial frequency) detail, so more of the subject's information reaches the image.

By the convolution theorem, the Fourier transform of the convolution of two functions is the product of their Fourier transforms. So instead of convolving directly, we multiply the Fourier transform of the subject by the Fourier transform of the imaging device's response, and then take the inverse Fourier transform of the product.
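The convolution theorem can be checked numerically. In this Python sketch, a direct circular convolution and the multiply-then-inverse-transform route give the same result. The helpers (`dft2`, `circular_convolve`, `convolve_via_fft`) and the toy arrays are hypothetical. Note that DFT-based convolution is circular, meaning it wraps around the image edges.

```python
import cmath

def dft2(img, inverse=False):
    """Direct 2D DFT; the inverse uses the opposite sign and divides by M*N."""
    M, N = len(img), len(img[0])
    sign = 1 if inverse else -1
    scale = 1 / (M * N) if inverse else 1
    return [[scale * sum(img[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x / M + v * y / N))
                         for x in range(M) for y in range(N))
             for v in range(N)]
            for u in range(M)]

def circular_convolve(f, h):
    """Direct circular convolution: g[x][y] = sum over a, b of f[a][b] * h[(x-a) % M][(y-b) % N]."""
    M, N = len(f), len(f[0])
    return [[sum(f[a][b] * h[(x - a) % M][(y - b) % N] for a in range(M) for b in range(N))
             for y in range(N)]
            for x in range(M)]

def convolve_via_fft(f, h):
    """Convolution theorem: multiply the two spectra element by element, then invert."""
    F, H = dft2(f), dft2(h)
    product = [[F[u][v] * H[u][v] for v in range(len(F[0]))] for u in range(len(F))]
    return [[val.real for val in row] for row in dft2(product, inverse=True)]

# Toy "subject" (two point sources) and a toy blur kernel that spreads each point sideways.
subject = [[0, 0, 0, 0],
           [0, 1, 0, 0],
           [0, 0, 2, 0],
           [0, 0, 0, 0]]
blur = [[0.5, 0.25, 0, 0.25],   # 0.5 at the point, 0.25 to each horizontal neighbour (wrapping)
        [0, 0, 0, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 0]]

direct = circular_convolve(subject, blur)
via_fft = convolve_via_fft(subject, blur)
print(direct)
print([[round(v, 6) for v in row] for row in via_fft])
```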

In an optical system, a lens effectively performs a Fourier transform of the light coming from the subject [1]. We can therefore think of the aperture as already being in the Fourier plane, so we simply multiply it with the Fourier transform of the image.

Figure 3. Simulation of an imaging device with different aperture diameters.

As expected, the smaller apertures produced blurrier images than the larger ones.
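Here is a Python sketch of the aperture simulation on an 8x8 toy subject. The image's spectrum is multiplied by a circular pupil centered on zero frequency, transformed back, and the modulus is kept. All helper names are hypothetical, and keeping the modulus at the end is an assumption about how the output is displayed. Two limiting cases make a useful check: a pupil large enough to pass every frequency returns the subject unchanged, while a pupil that passes only the zero frequency leaves a uniform gray equal to the mean brightness.

```python
import cmath

def dft2(img, inverse=False):
    """Direct 2D DFT (slow but accurate enough for an 8x8 toy image)."""
    M, N = len(img), len(img[0])
    sign = 1 if inverse else -1
    scale = 1 / (M * N) if inverse else 1
    return [[scale * sum(img[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x / M + v * y / N))
                         for x in range(M) for y in range(N))
             for v in range(N)]
            for u in range(M)]

def signed_freq(k, n):
    """Map an unshifted DFT index 0..n-1 to a signed frequency (0,1,2,3,4,-3,-2,-1 for n=8)."""
    return k if k <= n // 2 else k - n

def circular_aperture(M, N, radius):
    """Binary pupil centred on zero frequency, laid out in unshifted (FFT) order.

    For even array sizes this is the same as drawing a centred disc and applying fftshift.
    """
    return [[1 if signed_freq(u, M) ** 2 + signed_freq(v, N) ** 2 <= radius ** 2 else 0
             for v in range(N)]
            for u in range(M)]

def image_through_aperture(img, radius):
    """Multiply the image's spectrum by the pupil, transform back, and keep the modulus."""
    M, N = len(img), len(img[0])
    F = dft2(img)
    pupil = circular_aperture(M, N, radius)
    filtered = [[F[u][v] * pupil[u][v] for v in range(N)] for u in range(M)]
    return [[abs(val) for val in row] for row in dft2(filtered, inverse=True)]

# 8x8 toy "subject": a bright 3x3 square on a dark background.
square = [[1 if 2 <= x <= 4 and 2 <= y <= 4 else 0 for y in range(8)] for x in range(8)]

for r in (0, 1, 2, 10):
    out = image_through_aperture(square, r)
    print(r, [round(v, 2) for v in out[3]])  # middle row of the result
```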

Template matching

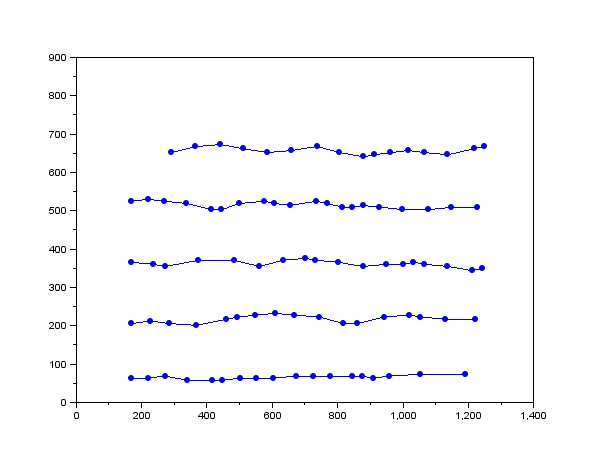

We can also perform template matching using correlation. Similar to the previous part, we work with the Fourier transforms of two images. In this part, we use an image containing the text:

THE RAIN IN SPAIN STAYS MAINLY IN THE PLAIN

and a template image of the same size as the first image, containing only the letter "A", which is the letter we want to find. Using correlation, we can determine the locations of the letter "A" (from the template) in the first image. Both images use similar font sizes and styles.

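However, instead of multiplying the two Fourier transforms directly, we first take the complex conjugate (using the function conj()) of the Fourier transform of the template image. We then multiply it with the Fourier transform of the text image and take the inverse Fourier transform of the product.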
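Here is a small Python sketch of this correlation step on a toy binary "text" image. The image contains two copies of a crude 3x3 A-like pattern and a vertical bar as a distractor. The pattern, image size and variable names are all made up for illustration, and Python's `.conjugate()` plays the role of Scilab's conj(). The template holds the pattern at its top-left corner, so each correlation peak sits at the offset of a match.

```python
import cmath

def dft2(img, inverse=False):
    """Direct 2D DFT; the inverse uses the opposite sign and divides by M*N."""
    M, N = len(img), len(img[0])
    sign = 1 if inverse else -1
    scale = 1 / (M * N) if inverse else 1
    return [[scale * sum(img[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x / M + v * y / N))
                         for x in range(M) for y in range(N))
             for v in range(N)]
            for u in range(M)]

M, N = 6, 12
A = [[0, 1, 0],
     [1, 1, 1],
     [1, 0, 1]]          # a crude 3x3 "A" with 6 lit pixels

# Toy text image: two copies of the A pattern plus a vertical bar as a distractor.
text = [[0] * N for _ in range(M)]
for r0, c0 in [(1, 1), (2, 7)]:
    for i in range(3):
        for j in range(3):
            text[r0 + i][c0 + j] = A[i][j]
for r in range(3):
    text[r][5] = 1

# Template of the same size as the text image, with the A at its top-left corner.
template = [[0] * N for _ in range(M)]
for i in range(3):
    for j in range(3):
        template[i][j] = A[i][j]

F_text = dft2(text)
F_tmpl = dft2(template)
# Conjugate the template's spectrum, multiply, and transform back:
# corr[dx][dy] = sum over x, y of template[x][y] * text[x + dx][y + dy] (indices wrap around).
product = [[F_tmpl[u][v].conjugate() * F_text[u][v] for v in range(N)] for u in range(M)]
corr = [[round(val.real, 6) for val in row] for row in dft2(product, inverse=True)]

best = max(max(row) for row in corr)
peaks = [(x, y) for x in range(M) for y in range(N) if corr[x][y] == best]
print(best, peaks)
```

The peak value equals the number of lit pixels in the template, since only an exact match overlaps all of them.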

Figure 4. Text image and template image

Using the described method, I got this image:

Figure 5. Correlated image

In the correlated image, the peaks (the bright spots) occur at the locations of the letter A in the text image. As you can see, the template A is effectively smeared over every point of the letters in the text image, which causes the blur in the correlated image. Where the template lands exactly on an identical letter, bright spots form, indicating a strong correlation.

By thresholding the image in Figure 5, I was able to isolate the bright spots:

Figure 6. Thresholding of the correlated image

This was produced using the function SegmentByThreshold(), with the threshold set to 0.8. These bright spots are the locations of the letter A in the text image.
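A thresholding sketch in Python. It assumes SegmentByThreshold() keeps the pixels whose value exceeds the threshold after the image has been scaled to [0, 1]; that scaling step is an assumption, not a documented behaviour of the function. The helper `threshold_peaks` and the 4x4 correlation values are made up for illustration.

```python
def threshold_peaks(corr, level=0.8):
    """Min-max scale `corr` to [0, 1], then mark pixels above `level` as 1 (else 0)."""
    lo = min(min(row) for row in corr)
    hi = max(max(row) for row in corr)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a constant image
    return [[1 if (v - lo) / span > level else 0 for v in row] for row in corr]

# Made-up correlation values: two strong peaks (5.0 and 4.6) and one weaker bump (3.1).
corr = [[0.1, 0.3, 0.2, 0.0],
        [0.2, 5.0, 0.4, 0.1],
        [0.0, 0.5, 3.1, 0.2],
        [0.1, 0.2, 4.6, 0.3]]
mask = threshold_peaks(corr)
locations = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
print(locations)
```

Only the pixels above 80% of the maximum survive, so the weaker bump at 3.1 is discarded.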

Edge Detection

Edge detection can also be performed by convolving an image with a 3x3 matrix of a specific pattern. The elements of each matrix we created sum to zero, so uniform regions of the image give zero response and only changes in intensity (edges) remain. In the following figure, we see each matrix, followed by a 128x128 pixel image with the matrix at its center, and the result of convolving it with the original image (as seen in Figure 3).

Figure 7. Convolution of the original image with each 3x3 matrix.

The first matrix detected the horizontal edges of the image containing the word "VIP", while the second matrix detected the vertical edges. The second matrix also detected the diagonal lines, since on a pixel grid these lines are made up of short vertical segments. The final matrix detected both the vertical and horizontal edges of the original image.
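A Python sketch of three zero-sum matrices of this kind, applied by direct convolution to tiny synthetic edge images. The exact kernel values are an assumption (common choices of this form), not necessarily the matrices used for Figure 7, and all names are hypothetical. The horizontal kernel gives zero response on a purely vertical edge because each of its columns sums to zero. Likewise, the vertical kernel ignores a horizontal edge because each of its rows sums to zero.

```python
# Assumed zero-sum kernels (illustrative; not necessarily the exact matrices from Figure 7).
HORIZONTAL = [[-1, -1, -1],
              [ 2,  2,  2],
              [-1, -1, -1]]
VERTICAL = [[-1, 2, -1],
            [-1, 2, -1],
            [-1, 2, -1]]
SPOT = [[-1, -1, -1],
        [-1,  8, -1],
        [-1, -1, -1]]

def convolve3x3(img, k):
    """'Valid' 2D convolution with a 3x3 kernel: the 1-pixel border is dropped."""
    M, N = len(img), len(img[0])
    return [[sum(k[i + 1][j + 1] * img[x - i][y - j]
                 for i in (-1, 0, 1) for j in (-1, 0, 1))
             for y in range(1, N - 1)]
            for x in range(1, M - 1)]

def responds(img, k):
    """True if the kernel gives a non-zero response anywhere on the image."""
    return any(v != 0 for row in convolve3x3(img, k) for v in row)

# 6x6 test images: a horizontal edge (dark top, bright bottom), its transpose, and a flat patch.
horizontal_edge = [[1 if x >= 3 else 0 for y in range(6)] for x in range(6)]
vertical_edge = [[1 if y >= 3 else 0 for y in range(6)] for x in range(6)]
flat = [[1] * 6 for _ in range(6)]

for name, k in [("horizontal", HORIZONTAL), ("vertical", VERTICAL), ("spot", SPOT)]:
    print(f"{name:10s} sum={sum(map(sum, k))} "
          f"horizontal edge={responds(horizontal_edge, k)} "
          f"vertical edge={responds(vertical_edge, k)} flat={responds(flat, k)}")
```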

For this activity, I would give myself a grade of 10 since I was able to perform the activity correctly.

Reference

[1] M. Soriano. Activity 7 - Fourier transform model of image transformation. 2013.